HP Network Configuration Utility for Windows Server 2016

Windows Server 2016: NIC Teaming Functionality

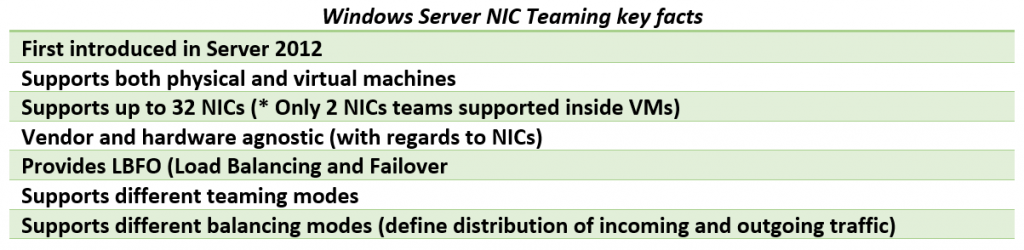

NIC teaming is not new in Windows Server 2016, but I find it interesting to review this functionality as it exists in the current iteration of Windows Server, touching, as usual, on the basics and history of the feature.

The NIC teaming feature reached maturity in Server 2012 R2, and there have been (almost) no major changes in this area in Server 2016. Still, if you are just starting to use NIC teaming in practice or preparing for a related Microsoft exam, you may find it useful to review this feature thoroughly.

NIC teaming, also known as LBFO (Load Balancing/Failover), was first introduced as an out-of-box (OOB) feature in Windows Server 2012, with the intent of adding simple, affordable traffic reliability and load balancing for server workloads. First-hand explanations of the feature's benefits from Don Stanwyck, who was its program manager at the time, can be found online. But, long story short, this feature gained special importance with the arrival of virtualization and high-density workloads: given how many services can run inside the multiple VMs hosted on a single Hyper-V host, you simply cannot afford a connectivity loss that impacts all of them. This is why we got NIC teaming: to safeguard against a range of failures, from an accidental cable disconnection to NIC or switch failures. The same workload density also pushes for increased bandwidth.

In a nutshell, Windows Server NIC teaming provides hardware-independent bandwidth aggregation and transparent failover, suitable for both physical and virtualized servers. Before Server 2012, you had to rely on hardware/driver-based NIC teaming, which obliged you to buy NICs from the same vendor and rely on third-party software to make the magic happen. This approach had some major shortcomings: you could not mix NICs from different vendors in a team, each vendor had its own approach to managing teams, and these implementations lacked remote management features. In short, it was not simple enough. Once Windows Server NIC teaming was introduced, hardware-specific teaming solutions fell out of common use, as it no longer made sense for hardware vendors to reinvent the wheel and offer functionality that is better handled by the built-in Windows feature, which supports any WHQL-certified Ethernet NIC.

As I said earlier, Windows Server 2016 did not introduce any major changes to NIC teaming, except for Switch Embedded Teaming, which is positioned as the future of teaming in Windows. Switch Embedded Teaming (SET) is an alternative NIC teaming solution that you can use in environments that include Hyper-V and the Software Defined Networking (SDN) stack in Windows Server 2016. SET integrates some NIC teaming functionality into the Hyper-V Virtual Switch.

On the LBFO teaming side, Server 2016 added the ability to switch the LACP timer between slow and fast, which may be important for those who use Cisco switches (there seems to be an update that allows the same in Server 2012 R2).
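The slow/fast choice can be expressed in numbers. In IEEE 802.1AX (formerly 802.3ad) LACP, the fast rate means an LACPDU every second and the slow rate one every 30 seconds. A partner is timed out after three missed intervals, which gives 3 seconds on fast and 90 seconds on slow. The sketch below only does that arithmetic. The helper name is hypothetical, and the `Fast`/`Slow` labels are assumed to mirror the Windows setting names.

```python
# Hypothetical helper: LACP periodic transmit intervals per IEEE 802.1AX, in seconds.
LACP_PERIODIC_INTERVAL_S = {"Fast": 1, "Slow": 30}
# A link partner is considered gone after three consecutive missed LACPDUs.
MISSED_LACPDUS_BEFORE_TIMEOUT = 3


def lacp_timeout_seconds(timer: str) -> int:
    """Return how long a silent link partner survives before it is timed out."""
    if timer not in LACP_PERIODIC_INTERVAL_S:
        raise ValueError(f"unknown LACP timer: {timer!r}")
    return LACP_PERIODIC_INTERVAL_S[timer] * MISSED_LACPDUS_BEFORE_TIMEOUT


for timer in ("Fast", "Slow"):
    print(timer, lacp_timeout_seconds(timer), "s")
```

With the slow timer, a member whose partner silently stops responding can stay in the team for up to 90 seconds. The fast timer cuts that to about 3 seconds.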

The gains and benefits of Windows Server NIC teaming are fairly obvious, but what can give you a hard time (especially if you're preparing for Microsoft exams) is wrapping your head around all possible NIC teaming modes and their combinations. So let's see if we can sort out these teaming modes, all of which can be configured via both the GUI and PowerShell.


Let's start with teaming modes, as choosing one is the first decision to make when configuring NIC teaming. There are three teaming modes to choose from: Switch Independent and two Switch Dependent modes, LACP and Static.

Switch Independent mode means you don't need to configure anything on the switch; everything is handled by Windows Server. In this mode you can even attach your NICs to different switches. All outbound traffic is load balanced across the physical NICs in the team (exactly how depends on the load balancing mode), but incoming traffic is not, because the switches are unaware of the team. This is an important distinction that sometimes takes a while to spot, especially if you frame your question to Google as something like "LACP vs switch independent performance".

Switch dependent modes require configuring the switch to make it aware of the team, and the NICs have to be connected to the same switch. To quote the official documentation: "Switch dependent teaming requires that all team members are connected to the same physical switch or a multi-chassis switch that shares a switch ID among the multiple chassis."

There are plenty of switches that let you try this at home. For example, I have an affordable yet good D-Link DGS-1100-18 from D-Link's DGS-1100 series of Gigabit Smart Switches. It supports 802.3ad Link Aggregation with up to 9 groups per device and 8 ports per group, and at the time of writing it costs around 100 EUR.

Static teaming mode requires you to configure individual switch ports and connect cables to those specific ports. This is why it is called static: the ports are assigned statically, and if you connect a cable to a different port, you'll break your team.

LACP stands for Link Aggregation Control Protocol and is essentially the more dynamic version of switch dependent teaming, meaning that you configure the switch rather than individual ports. Once the switch is configured, it becomes aware of your team and handles dynamic negotiation of the ports.

In both switch dependent teaming modes, the switch is aware of the team and can therefore load balance incoming network traffic.
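The trade-offs between the three teaming modes described above can be summarized as data. This is a hypothetical Python model of the text, not anything Windows exposes. The field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamingMode:
    name: str
    switch_config_required: bool  # must the switch be told about the team?
    inbound_load_balanced: bool   # can the switch spread traffic coming *to* the team?
    dynamic_negotiation: bool     # are member ports negotiated (LACP) rather than fixed?
    multi_switch_ok: bool         # may members connect to different, independent switches?


MODES = (
    TeamingMode("Switch Independent", False, False, False, True),
    # Switch dependent modes: one switch, or a multi-chassis switch sharing one switch ID.
    TeamingMode("Static", True, True, False, False),
    TeamingMode("LACP", True, True, True, False),
)

for mode in MODES:
    print(f"{mode.name:<20} switch config: {str(mode.switch_config_required):<5}  "
          f"inbound LB: {mode.inbound_load_balanced}")
```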

To control how the load is distributed, there is a range of load balancing modes (you can think of them as load balancing algorithms): Address Hash, Hyper-V Port, and Dynamic, which was first introduced in Server 2012 R2.

Address Hash mode uses attributes of network traffic (IP addresses, ports and MAC addresses) to determine which NIC in the team a given flow is sent through.
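Here is a minimal sketch of the address-hash idea. It assumes the hash input is a 4-tuple (source and destination IP and port) and uses CRC-32 as the hash function. The function name is hypothetical, and Windows' actual hash function is not described here, so treat this as an illustration only.

```python
import zlib


def pick_team_member(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
                     members: list[str]) -> str:
    """Choose a team member for a flow from a hash of its addresses and ports."""
    if not members:
        raise ValueError("the team has no members")
    # zlib.crc32 is deterministic across runs (unlike Python's built-in hash()).
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return members[zlib.crc32(key) % len(members)]


team = ["NIC1", "NIC2"]
for client_port in range(50000, 50006):
    nic = pick_team_member("10.0.0.5", client_port, "10.0.0.20", 445, team)
    print(f"10.0.0.5:{client_port} -> 10.0.0.20:445 via {nic}")
```

Because every packet of a flow hashes to the same member, packets are never reordered. The flip side is that a single flow can never use more than one NIC's bandwidth.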

Hyper-V Port load balancing ties each VM to a specific NIC in the team. This can work well on a Hyper-V host with numerous VMs, since their traffic gets distributed across multiple NICs in the team. If you have just a few VMs, though, it may not be your best choice, as it leads to underutilization of the NICs in the team.
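The following toy model shows why Hyper-V Port mode can underuse the team. Each VM's switch port is pinned to one member NIC, so a single busy VM never uses more than one NIC. The round-robin placement and the helper names are assumptions for illustration; Windows chooses the member itself.

```python
def assign_vm_ports(vms: list[str], members: list[str]) -> dict[str, str]:
    """Pin each VM's virtual switch port to one team member (round-robin here)."""
    if not members:
        raise ValueError("the team has no members")
    return {vm: members[i % len(members)] for i, vm in enumerate(vms)}


def members_in_use(assignment: dict[str, str]) -> int:
    """Count how many team members carry any VM traffic at all."""
    return len(set(assignment.values()))


team = ["NIC1", "NIC2", "NIC3", "NIC4"]
few_vms = assign_vm_ports(["VM1"], team)
many_vms = assign_vm_ports([f"VM{n}" for n in range(1, 11)], team)
print(members_in_use(few_vms), "of", len(team), "NICs used with 1 VM")     # 1 of 4
print(members_in_use(many_vms), "of", len(team), "NICs used with 10 VMs")  # 4 of 4
```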

Dynamic is the default option, introduced in Server 2012 R2 and recommended by Microsoft. It combines the advantages of both previously mentioned algorithms: outbound traffic is distributed using address hashing, inbound traffic is distributed as in Hyper-V Port mode, and additional logic rebalances outbound traffic when there are failures or natural breaks in traffic within the team.
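Microsoft describes the outbound side of Dynamic mode in terms of "flowlets": when a flow pauses long enough, its next burst can be moved to another team member without reordering packets. The class below is a simplified, hypothetical sketch of that idea and also shows flows being re-placed after a member fails. The gap threshold, the least-loaded placement (used here instead of an initial hash), and all names are assumptions, not Windows internals.

```python
from dataclasses import dataclass, field

Flow = tuple[str, int, str, int]  # (src_ip, src_port, dst_ip, dst_port)

# Hypothetical idle gap (seconds) after which the next packets start a new flowlet.
FLOWLET_GAP_S = 0.0005


@dataclass
class DynamicBalancer:
    members: list[str]
    bytes_sent: dict[str, int] = field(default_factory=dict)
    placement: dict[Flow, str] = field(default_factory=dict)
    last_seen: dict[Flow, float] = field(default_factory=dict)

    def _least_loaded(self) -> str:
        # min() returns the first member on ties.
        return min(self.members, key=lambda m: self.bytes_sent.get(m, 0))

    def send(self, flow: Flow, size: int, now: float) -> str:
        """Return the member used for this packet, re-placing the flow at flowlet boundaries."""
        new_flowlet = flow not in self.placement or now - self.last_seen[flow] > FLOWLET_GAP_S
        if new_flowlet:
            # Moving a flow only after an idle gap avoids reordering in-flight packets.
            self.placement[flow] = self._least_loaded()
        member = self.placement[flow]
        self.bytes_sent[member] = self.bytes_sent.get(member, 0) + size
        self.last_seen[flow] = now
        return member

    def fail(self, member: str) -> None:
        """Remove a failed member; flows that used it are re-placed on their next packet."""
        self.members.remove(member)
        self.placement = {f: m for f, m in self.placement.items() if m != member}


lb = DynamicBalancer(["NIC1", "NIC2"])
a = ("10.0.0.5", 50000, "10.0.0.20", 445)
b = ("10.0.0.6", 50001, "10.0.0.20", 445)
print(lb.send(a, 1500, 0.0000))  # NIC1: both idle, first member wins the tie
print(lb.send(a, 1500, 0.0001))  # NIC1: same flowlet
print(lb.send(b, 1500, 0.0002))  # NIC2: less loaded
print(lb.send(a, 1500, 0.0100))  # NIC2: new flowlet, and NIC1 has sent more
```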

So now that we covered the things you’re supposed to know to make informed NIC teaming configuration decisions or take Microsoft exams, let’s have a look at the practical side of configuring this.

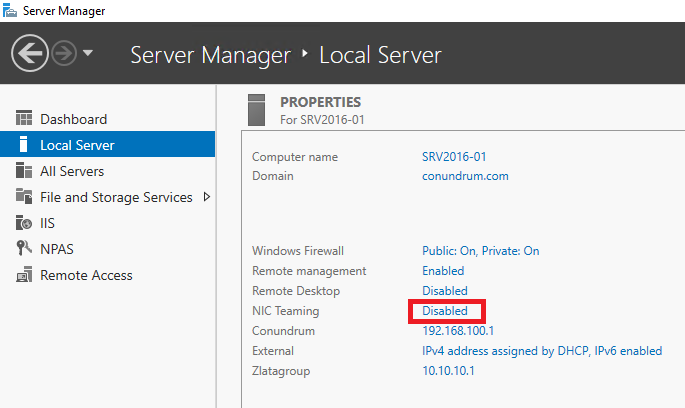

The GUI for managing NIC teams is available through Server Manager: just click the Disabled link next to NIC Teaming to start the configuration process.

This takes you to the NIC Teaming console, where things are straightforward and easy (provided you have read the first half of this article).

If you are doing this inside a VM, you may notice that your choices in the Additional properties section of the New team dialog are limited to Switch Independent teaming mode and Address Hash load balancing mode, with the first two drop-downs greyed out. This simply means we have to review what is supported and required for NIC teams inside VMs. From a supportability point of view, Microsoft supports only two-member NIC teams in VMs, and the NICs participating in the team have to be connected to two different external virtual switches (which means different physical adapters). With these requirements met, NIC teams inside VMs must have their teaming mode configured as Switch Independent and their load balancing mode configured as Address Hash.
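The guest-team support rules listed above lend themselves to a simple checklist. The sketch below encodes them in a hypothetical Python helper. The class, field names and string values are illustrative labels, not a Windows API. It also assumes every vSwitch named is external, because that property is not modeled.

```python
from dataclasses import dataclass


@dataclass
class GuestTeam:
    """Hypothetical description of a NIC team configured inside a VM."""
    vnic_to_vswitch: dict[str, str]  # guest vNIC name -> external vSwitch it is attached to
    teaming_mode: str                # e.g. "SwitchIndependent", "Static", "Lacp"
    load_balancing: str              # e.g. "AddressHash", "HyperVPort", "Dynamic"


def support_issues(team: GuestTeam) -> list[str]:
    """List the ways a guest team departs from the supported configuration."""
    issues = []
    if len(team.vnic_to_vswitch) != 2:
        issues.append("a guest team must have exactly two members")
    vswitches = list(team.vnic_to_vswitch.values())
    if len(set(vswitches)) != len(vswitches):
        issues.append("each member must use a different external vSwitch")
    if team.teaming_mode != "SwitchIndependent":
        issues.append("teaming mode must be Switch Independent")
    if team.load_balancing != "AddressHash":
        issues.append("load balancing mode must be Address Hash")
    return issues


good = GuestTeam({"vNIC1": "External-A", "vNIC2": "External-B"}, "SwitchIndependent", "AddressHash")
bad = GuestTeam({"vNIC1": "External-A", "vNIC2": "External-A"}, "Lacp", "AddressHash")
print(support_issues(good))  # []
print(support_issues(bad))
```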

Given these limitations, it is tempting to say that it is better to configure NIC teaming on the Hyper-V host itself and not use it inside a VM at all. But I bet there are use cases for teaming inside a VM; I just can't think of one right now (if you can, let me know in the comments to this blog post).

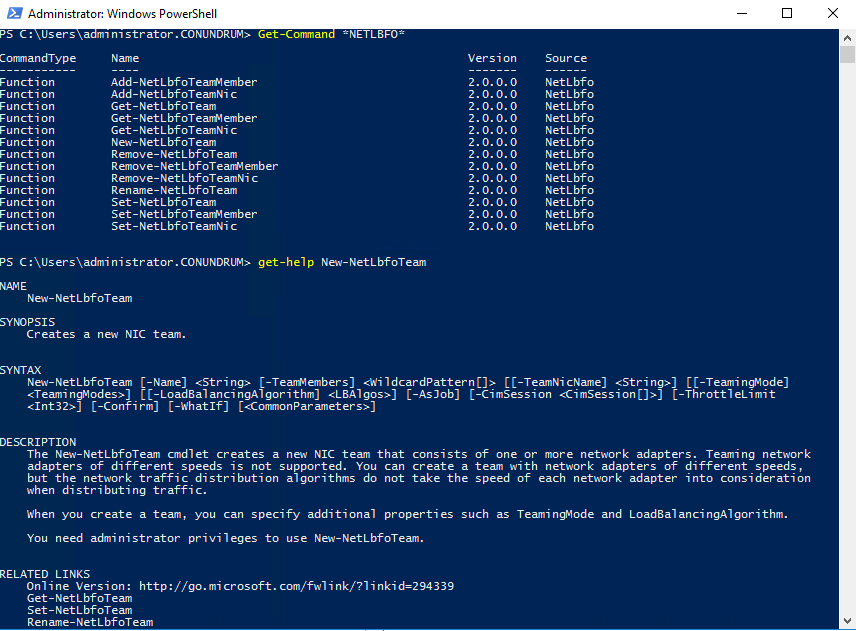

If you want to manage NIC teaming via PowerShell, you can get all the required information from the NetLbfo module documentation on TechNet, or just run Get-Command *NETLbfo* and then use Get-Help as necessary.
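For example, the NetLbfo module creates a team with `New-NetLbfoTeam`. Its `-TeamingMode` parameter accepts `Static`, `SwitchIndependent` or `Lacp`. Its `-LoadBalancingAlgorithm` parameter accepts `TransportPorts`, `IPAddresses` or `MacAddresses` (the Address Hash variants), as well as `HyperVPort` or `Dynamic`. Confirm these values with `Get-Help New-NetLbfoTeam` on your build. The hypothetical Python helper below only assembles such a command line, with basic validation and PowerShell single-quote escaping. It does not run anything.

```python
TEAMING_MODES = {"SwitchIndependent", "Static", "Lacp"}
LB_ALGORITHMS = {"TransportPorts", "IPAddresses", "MacAddresses", "HyperVPort", "Dynamic"}


def ps_quote(value: str) -> str:
    """Quote a value as a PowerShell single-quoted literal (an embedded ' is doubled)."""
    return "'" + value.replace("'", "''") + "'"


def new_team_command(name: str, members: list[str],
                     teaming_mode: str = "SwitchIndependent",
                     lb_algorithm: str = "Dynamic") -> str:
    """Build (but do not run) a New-NetLbfoTeam command line."""
    if not members:
        raise ValueError("a team needs at least one member NIC")
    if teaming_mode not in TEAMING_MODES:
        raise ValueError(f"unsupported teaming mode: {teaming_mode}")
    if lb_algorithm not in LB_ALGORITHMS:
        raise ValueError(f"unsupported load balancing algorithm: {lb_algorithm}")
    members_arg = ",".join(ps_quote(m) for m in members)
    return (f"New-NetLbfoTeam -Name {ps_quote(name)} -TeamMembers {members_arg} "
            f"-TeamingMode {teaming_mode} -LoadBalancingAlgorithm {lb_algorithm}")


print(new_team_command("Team1", ["Ethernet", "Ethernet 2"]))
# New-NetLbfoTeam -Name 'Team1' -TeamMembers 'Ethernet','Ethernet 2' -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic
```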

I think this blog post provides enough information to get you started with NIC teaming, especially if you have not read anything on it yet. If you already knew most of this, perhaps the Switch Independent vs Switch Dependent dilemma is clearer now. All in all, in the current versions of Windows Server, Microsoft did a great job of making this functionality as easy to use as possible and pushing it forward via the GUI, so that it sits right in Server Manager, practically asking you to set it up. If you want to go into detail, I recommend a great document from Microsoft, "Windows Server 2016 NIC and Switch Embedded Teaming User Guide", which gives complete and detailed guidance on all facets of NIC teaming in Server 2016. Older versions of this document are still available for Windows Server 2012 and 2012 R2, and the current Microsoft documentation on NIC teaming is also very good.

HP Network Configuration Utility

Have a DL380 G5 with 2x NC373i running W2K3. Tried to update the Network Configuration Utility and have not been able to. Updated the network card drivers to 3.0.5.0. Removed the network cards from Device Manager, rebooted, still will not install. Also installed 7.80. Removed the Network Configuration Utility. Still no joy. It comes up with "The installation procedure did not complete successfully. An error occurred during the setup process." The cpqsetup.log just says it did not install.

Any thoughts appreciated

I have tried downloading and uncompressing the Network Configuration Utility and running cpqsetup.exe, and I still get the same error. It does, though, check the network card's driver version: when I installed an older network driver, it said that the utility would not be available until the driver was updated. Both network cards are working. I have even done a BIOS update on both cards. I will try installing in safe mode and let you know if that works.

Safe mode and safe mode with networking didn't work either: the error was that it could not find any HP network cards.

First, what kind of error did you get?

Try following this order:

1. In Device Manager, remove both NICs.

2. Install the latest drivers for the NICs.

3. Install the latest NCU.

4. Apply the latest firmware, but only if necessary.

5. Now try to configure the cards.

Attached is a screenshot of the error I get when I try to install the Network Configuration Utility. The cpqsetup.log says:

Command Line Parameters Given:

Beginning Interactive Session.

Name: HP Network Configuration Utility for Windows Server 2003

New Version: 8.60.0.0

The software is not installed on this system, but is supported for installation.

The operation was not successful.

I removed the utility via the network control panel, removed both network cards via Device Manager and restarted the server. The server detected the network cards and reinstalled the latest network card drivers, 3.0.7.0 (cp007290.exe). I tried installing the 8.60 (cp007172.exe) Network Configuration Utility, and it will not install.

Windows 2008 R2 on an HP DL380 G4p

If you are reading this article, it means we still don't have an "Antique Hardware" hub here.

Old HP hardware has only one drawback: it keeps working and working and just won't break. But the people at HP are no fools either, and they do everything they can to encourage customers to refresh their server fleets. Drivers for new operating systems are not developed and firmware updates stop, so you end up being kicked into paradise and, like it or not, you have to upgrade.

But we have our own interests too. Using old hardware for the tasks it can still handle is our first duty, especially since we have spare Windows 2008 R2 licenses. Let's try to shoehorn Windows 2008 R2 onto our old DL360 G4p (P54).

The Koloboks Investigate

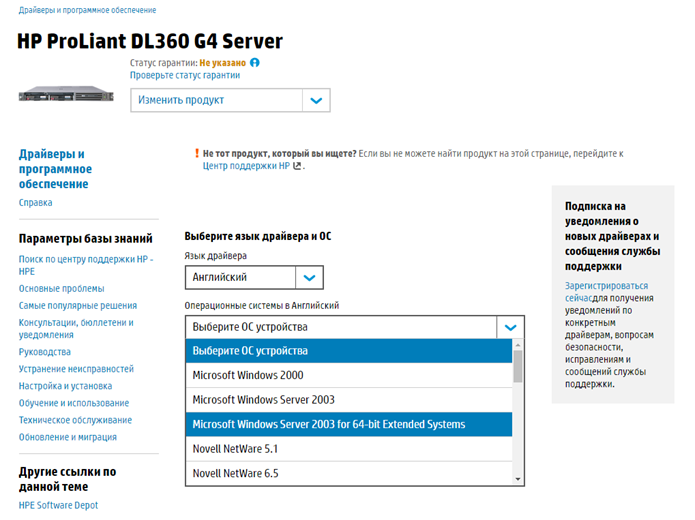

Windows 2008 R2 no longer appears in the lists of supported systems on the DL360 G4 server page.

If we look at the support matrix,

h17007.www1.hp.com/us/en/enterprise/servers/supportmatrix/windows.aspx#.Vufcl-KLTcs

we see that W2K8 R2 is officially supported only on G7 and newer generations. G4 is not mentioned at all.

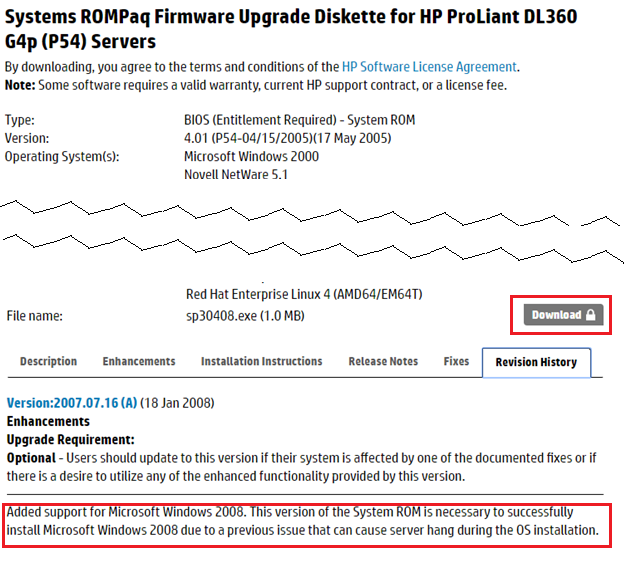

Nevertheless, if you go to the BIOS page for the G4p, you will find the following.

That is, a BIOS for the G4p (P54) (sp30408.exe) that supports installing W2K8 does exist, but it is "under lock and key" and available only with a support contract.

Before starting, you should:

1. Run the latest Firmware CD available for this model on the server, or put it before the dark eyes of HP Smart Update Manager and lift its eyelids.

2. Buy a support contract for the system and legally obtain the latest BIOS for the G4p: download and install BIOS 2007.07.16A.

3. Upgrade iLO2 to version 1.92.

Mysterious Artifacts and Special Ancient Magic

If your server has a floppy drive (FDD), remove it and blow the dust out with compressed air before inserting any floppies. The server is designed so that the fans pull a large volume of air through the floppy and CD drives, and all the dust settles inside these devices. If the drives have rarely been used, the accumulated dust works like good sandpaper.

It is better to deploy updates from a USB flash drive, but HP's standard tools require a drive NO LARGER than 2 GB!

A colleague of mine did the W2K8 installation, and according to him there were no problems.

Crossing a Snake with a Hedgehog, or Come Together

Combining the adapters into a team, however, took some tinkering. The server has two embedded NC7782 network adapters built on the Broadcom BCM5704CFKB chip, and we wanted to combine them into a team.

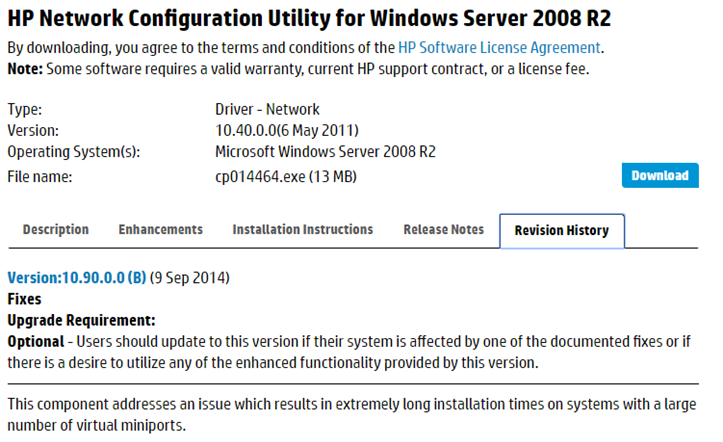

Traditionally, a team is built with HP NCU (Network Configuration Utility). NCU is a separate product, and its W2K8 version is posted on the HP website and can be downloaded without restrictions.

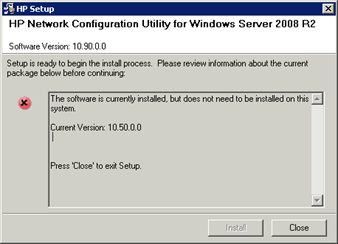

Version 10.50.0.0 of the utility installed on the server but did not see a single NC7782 adapter. The latest version, 10.90.0.0, went further and produced a rather interesting message.

This should be read as: "The software got installed somehow, but it's unclear how, and it definitely shouldn't have been."

And what about the drivers? The network card drivers were installed during the W2K8 installation. We guessed that NCU needs HP's own drivers.

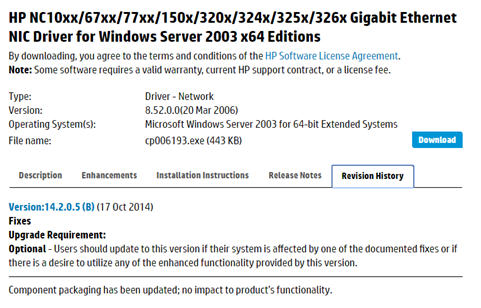

But where do you get the drivers, given that formally they are no longer made for 2008 R2 on the G4p? "Why, here they are, I put them right here!" Never mind that they are for Windows 2003 x64.

cp006193.exe

Of course, they won't install the usual way. When launched, cp006193.EXE reports that "these grenades are of the wrong system", meaning it can't work here because it doesn't want to.

But they do install manually. cp006193.EXE is a self-extracting archive that unpacks fine with WinRAR. Extract it to a separate folder, then go to System / Device Manager / Network adapters / Driver / Update… / Install manually, and point it at the folder. The driver is replaced on the fly; not even the terminal session drops. "2003 x64 on 2008 R2".

But this didn't help either: NCU did not see its own drivers. Perhaps downgrading NCU would have helped, but we decided to try a different solution.

Other server manufacturers, Dell and Fujitsu in particular, use Broadcom-developed software called BACS (Broadcom Advanced Control Suite) for teaming. Version 4 is currently available.

HP has it too: cp022114.exe

But HP sees its purpose somewhat differently: setting up iSCSI and FCoE targets on the relevant platforms. The HP build of BACS refused to run on the G4: "You don't have the right hardware for me," it says. Not that we really wanted it anyway.

I never found where to download BACS4 on the Broadcom website; the management applications link leads to a 404 page. Broadcom says the package ships on a CD together with vendors' network cards. The whole package is available on the Fujitsu website.

Or you can search the internet for the file FTS_BroadcomAdvancedControlSuite4BACS_14831_1064191.zip.

It dates from 2011, but it works.

Now let's install BACS4.

"HP or not HP, subscriber or not subscriber":

BACS4 doesn't bother with such nonsense. It doesn't care about "Fujitsu or not Fujitsu" either.

From there it's simple: Teams / Create. Name it Team1. Add both available adapters. (To avoid problems, only one cable is physically connected to the network while the team is being built. Once the team is built, connect the second cable and everything works as usual.)

If BACS4 doesn't like the drivers, it will tell you so. It complained about the HP drivers: "I want NDIS6, not NDIS5!"

We took fresh BCM5704 drivers from the Broadcom website (version 17.2.0.2, dated 3 July 2015) and installed them on the G4.

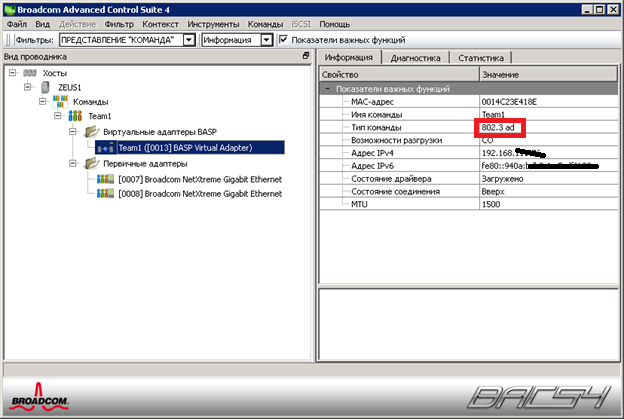

This is what the BACS4 interface looks like.

The server is connected to an HP ProCurve 2910, so during setup you have to select 802.3ad: ProCurve ports understand no other protocol (on the switch side, LACP is configured on the ports).

Everything works, all systems nominal.

On NIC teaming: a white paper from HP. Useful reading.